We are in the era of AI. The first time I used ChatGPT, I was surprised by how natural the text and answers sounded, but I had yet to be impressed by the substance of the content.

To me, it was just pattern-recognition software for text. I also tried DALL-E, and in my opinion it was basically a gimmick.

Today I tested Midjourney, and it's the first time I've been impressed by AI or machine learning. In this article, we will see why you should be excited, but also worried, about this innovation.

OUR SPONSOR OF THE DAY : NEONNIGHT.FR

What is Midjourney?

Midjourney is an AI that generates original pictures and illustrations from a simple text prompt, optionally combined with existing pictures.

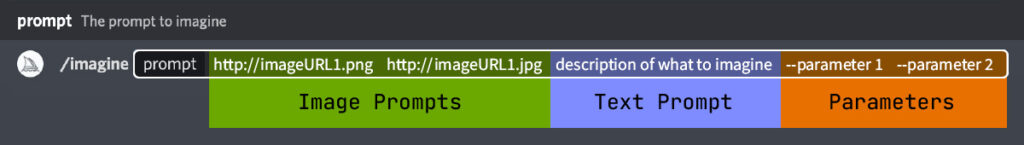

What is a prompt?

A prompt is simply the command that tells Midjourney what image to generate. It starts with the /imagine command, and the rest can be written freely.

You still need to follow a certain order, and writing clear, correctly worded descriptions will help as well.

How does it work?

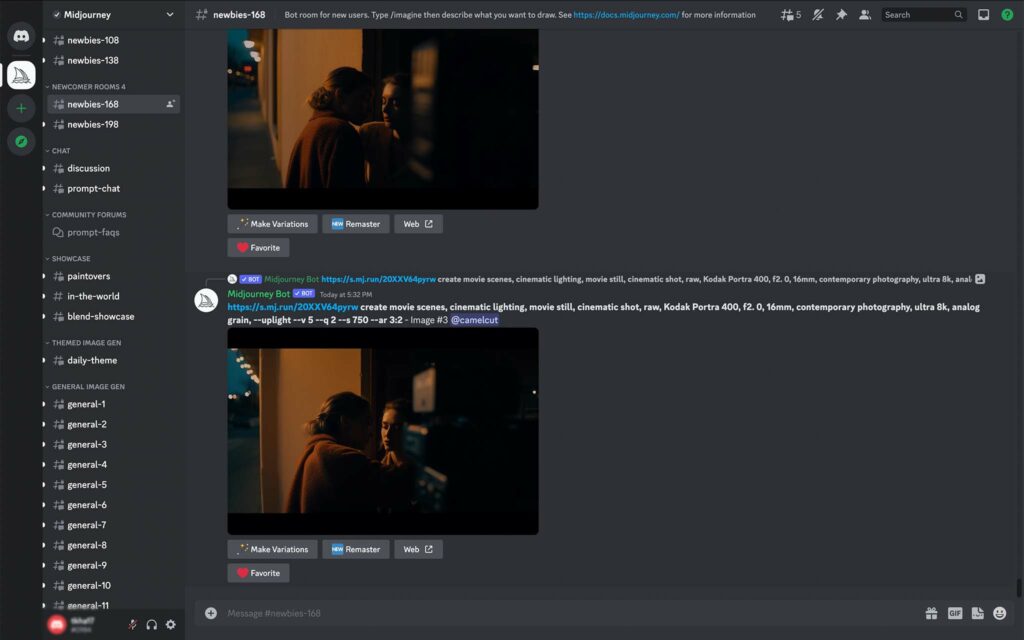

Unlike ChatGPT, Midjourney uses Discord's chat to receive prompts and to serve as its user interface.

I have to say I was reluctant at first to download an additional app just to try it, but in the end it makes sense: seeing what other users create helps you learn much faster.

You will also need a subscription. It used to be free, but generating this many images must be energy-intensive and costly, so you'll have to pay at least 8 to 10 dollars a month to use the service.

Note that generated images are public: everyone in the same channel can see what you are trying to create. There are also rules to follow to avoid creating harmful or offensive content.

Is it hard to use?

Compared to ChatGPT, getting good results is a bit more technical, but I think the results will blow you away very quickly.

For me, the hardest part is tweaking and refining the results. You usually need more than one try, which is why each prompt gives you four versions.

Once you have your four versions, you can rephrase your prompt, upscale an image, or create variations of one of the pictures until you get something you like.

It's quite easy to use. Even if you first need to learn what each button does, it's so engaging that you'll quickly figure it out.

Some examples

Prompt: Beautiful woman Model walking in the stairs of Montmartre Paris, in the style of epic fantasy scenes, dressed with a beige trench coat, canon 5D mark VI, national geographic photo, hyper realistic

For my first prompt, I tried to visualize one of the shots a friend and I are preparing for a perfume commercial. I have to admit the result wasn't far from what I had imagined.

The location didn't look real, even though the AI had the right idea about the place. It did paint the basilica of Montmartre (Sacré-Cœur) in the background, but it looked like a composite picture.

Prompt: Beautiful asian woman Model walking in place du Trocadero Paris, dressed with a beige trench coat and high heels, Arri Alexa, hyper realistic

After my first results, I tried to be more specific about the model's ethnicity and her shoes. The results were convincing, but because my prompt was poorly written, the AI made all the clothes beige. Semantics matter a lot if you want an accurate result.

Prompt: Beautiful asian woman Model walking in place du Trocadero Paris at night, in the style of Nicolas Winding Refn, with neons, dressed with a beige trench coat, white shirt, and black high heels, Arri Alexa, hyper realistic

Just to fool around, I even tried adding the style of director Nicolas Winding Refn. The AI got the main idea, but the execution wasn't very aesthetic. I think uploading a reference picture would yield better results.

Prompt: Handsome Korean actor posing inside an haussmannian appartement in Paris, dressed with a navy double breasted suit. Sitted and drinking a glass of Macallan Whisky at night with a view of the eiffel tower, Arri Alexa, hyper realistic, David Fincher's style

This is where I started to worry about my job as a photographer. These pictures are very close to what I had imagined; I find them to be of good quality and would actually be willing to use them. The lighting looks very realistic, and at no point does the picture look like a composite of several pictures.

I don't know whether the computer is actually calculating these things or whether the result is just heavily inspired by someone else's work.

Prompt: A beautiful Vietnamese woman of 29 years old with pale skin and long dark hair waiting for the bus, she is wearing a thin beige trench coat, with a navy blue dress, gold necklace and ring, high heel black shoes, wearing a expensive Gucci bag, she is holding a two year old asian baby boy with a self haircut. Behind is the bir hakeim bridge in Paris.

My wife tried to create a picture too, and even though it didn't match the prompt perfectly, it was still very close and very usable.

I think the pictures look great, and I would be happy if I had taken them. It's quite insane to think that they were generated.

Prompt: Song Hye Kyo laying in a flower field during sunset, surrounded by white, pink, and light purple flowers. She is wearing a dress with flowers. The flower field is located inside the Cour Carré of the Louvre Museum. The image have to fit Louis Vuitton commercial style.

I wanted to push Midjourney further by using real names and trying to reproduce a Louis Vuitton concept.

The pictures came out very nicely. I am honestly impressed by the image quality you can get without investing in a set, flowers, lights, and so on.

The pictures look real enough to be used as illustrations, and that's the scariest part, considering how new the technology is.

I think there’s no way on earth that this type of technology won’t eventually end up in programs like Unreal Engine 5. From there, it’s just a race until we have a simulation that is indistinguishable from reality.

Examples from other users

Prompt: https://s.mj.run/20XXV64pyrw create movie scenes, by using reference photo, cinematic lighting, movie still, cinematic shot, raw, award winning photograph, Kodak Portra 400, f2.0, 16mm, contemporary photography, Hasselblad, ultra 8k, Pentax 67, analog grain, --uplight --v 5 --q 2 --s 750 --ar 3:2

Other users were literally creating movie frames that look like real screen caps. I still feel that these were not generated from scratch but composited from other real screen caps.

I can't believe that AI is capable of producing something so real and unique.

Prompt: Ghibli style, a girl, Mountain, forest, --niji --ar 16:9

One user even managed to produce Ghibli-style anime screen caps. I don't know how the AI does it, but it looks as if it draws on real anime by Hayao Miyazaki.

Examples using another picture

Prompt: https://imd-human-transition.com/wp-content/uploads/2016/01/blog-lifestyle-6.jpg Man sitting in front of a lake and behind mountains, meditating trying to find inspiration during the summer, in the style of photo realistic, Kodak Portra 400, Mamiya RZ67 --3:2

The AI understood the prompt and produced a picture clearly inspired by the reference, with the same idea in mind. It even used a similar color palette. Forget about copyright removers; this goes way beyond them.

Getting inspiration from an existing commercial

Pushing things a little further: is it possible to reproduce a picture and change only the model?

Prompt: https://s.mj.run/AUJw_5dddfM, beautiful asian model laying in a flower field during sunset, by using reference photo, Kodak Portra 400, Pentax 67, analog grain

The results are very convincing, although I made the mistake of forgetting to set the proper aspect ratio with --ar 3:2. I think this tool could easily be used to storyboard or moodboard a video project.

The limitations

I don't claim to know how this technology works: is it painting a picture from scratch, or is it compositing multiple pictures?

My theory is that it's the latter. I have already seen watermarks on some of my generated pictures, although they weren't readable; the words almost looked as if they had been retouched with Photoshop's Clone Stamp tool.

I think most high-quality content requires very specific ideas with a high level of detail. No matter how much you improve your prompt, if there are too many details, the AI will struggle to produce the picture.

Midjourney doesn't really seem to know places. If you ask for a specific part of Paris, for example, it might mix up locations, and monuments never look genuine, almost as if there were a rule against copyright infringement. The same goes for people and objects: you can still recognize them, but slight differences make them original.

Prompt: mads mikkelsen as a russian aristocrat hunting in the jungle posing as a portrait looking at the camera in a menacing look with a gun --ar 16:9

The issues

The problem with Midjourney is that as soon as you look closely at a picture, you'll find compositing errors. For example, a person may be missing a finger or a leg, a light may be floating in midair, or something in the picture simply won't add up.

These small flaws are also very hard to hide or fix in Photoshop.


Verdict

8.5 out of 10.

I think Midjourney has finally reached the point where AI is becoming a threat to artists. This will definitely affect the stock-photo market in the near future.

For 8 dollars a month, you can generate hundreds of original pictures to use as illustrations on your website. For now, the pictures seem a tad generic and vague for specific content, and they still have issues with fingers and details, but the product is only at an early stage.

I am still a bit shocked by what this technology can do and what it might mean for photographers and illustrators. Of course, for now it's nearly impossible to, say, reproduce an existing person, since the AI probably has ethical rules built into its system.

Also, the level of precision isn't sufficient for real projects where small details matter: you can't prompt precisely without getting some anomaly in the picture. We'll see how this technology evolves, but it seems more than realistic that within a decade it will have made huge leaps, to the point of being used in most scenarios.

Information

Midjourney

Website: https://www.midjourney.com/

Discord Midjourney: https://discord.com/invite/midjourney

Discord website: https://discord.com

Video Production: https://www.neonnight.fr/en/
